By Lena Brandl

At RRD, we do not do research by locking ourselves in an ivory tower to brood over the next scientific breakthrough. Part of our work is getting out into the world to meet other researchers and interested people, to discuss the progress of eHealth and to share our latest findings. The Supporting Health by Technology symposium brings together healthcare professionals, academics and organizations that develop eHealth, which makes it a perfect stage to present and discuss RRD's latest eHealth research with colleagues from across the Netherlands and beyond. For the 11th edition of the symposium, RRD colleagues Lena Brandl, Marian Hurmuz and Stephanie Jansen-Kosterink joined the event at Martini Plaza in Groningen, the Netherlands.

During the conference, important current developments and challenges for eHealth were discussed:

- The world has seen a rapid increase in the development of individual eHealth applications. Google's Play Store and Apple's App Store now offer a wide range of eHealth apps, varying in functionality and pricing, for all sorts of health problems. It is less clear, however, how we can join forces and develop a global eHealth strategy to exploit technology's potential to improve modern healthcare.

- The inclusion of people from all regional, educational and ethnic backgrounds, including people who suffer from more than one disease (called multi-morbidity), is crucial for developing eHealth that actually helps people manage their health problems in everyday life. How can we include difficult-to-reach groups in eHealth research, and thereby keep the technology we develop from widening today's digital divide?

- What is the state of machine learning in eHealth? Which tasks can it perform, and how can it be optimized to support healthcare professionals in their work?

These are some of the questions addressed at Supporting Health by Technology. RRD contributed to the discussion by presenting some of our recent eHealth research:

- Marian Hurmuz presented the results of a social robot acceptance study conducted with patients and nurses in the Roessingh rehabilitation center, summarizing their acceptance and intention to use the social robot for daily care activities (SCOTTY project).

- Stephanie Jansen-Kosterink demonstrated the value of the SROI (Social Return on Investment) method for assessing the societal impact of innovations in healthcare, and showed how the method can help decide whether the societal benefits of employing a social robot in rehabilitation care outweigh the monetary investment in the robot (SCOTTY project).

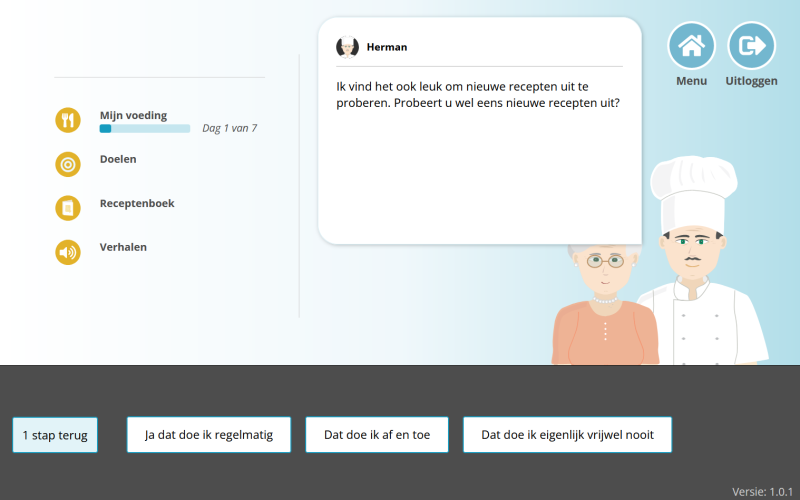

- Lena Brandl presented an automatic decision-making algorithm that uses a method called Fuzzy Cognitive Maps (FCMs) in a self-help eHealth service for older mourners. The aim of the decision-making algorithm is to guide older mourners to offline support when they need support beyond what the online service offers (LEAVES project).

With new ideas and questions buzzing in our heads, we return to RRD to continue our work on eHealth!